Probabilistic Resilience Engineering

Engineering for the Age of Agentic AI

"Because the future is probabilistic, our operations must be adaptive."

The Question That Changes Everything

For decades, we asked one question about our systems: "Does it break?" That question assumed failure was binary. A crash. A timeout. A 500 error. Something you could observe, measure, and fix.

That question is now obsolete.

In the age of Agentic AI, systems don't break; they drift. An agent can respond 200 OK while confidently lying to the user. A multi-agent workflow can maintain perfect uptime while corrupting the data mesh. The lights stay on while the logic fails.

The question we must ask instead:

"How does the probability distribution of system behavior shift when X becomes unavailable?"

This is not refinement; it's a paradigm shift. We are no longer testing for binary failures. We are measuring the distribution shift — the slow and silent divergence away from system intent that no traditional monitor will catch.

This is the Physics of Failure. And it demands a new engineering discipline.

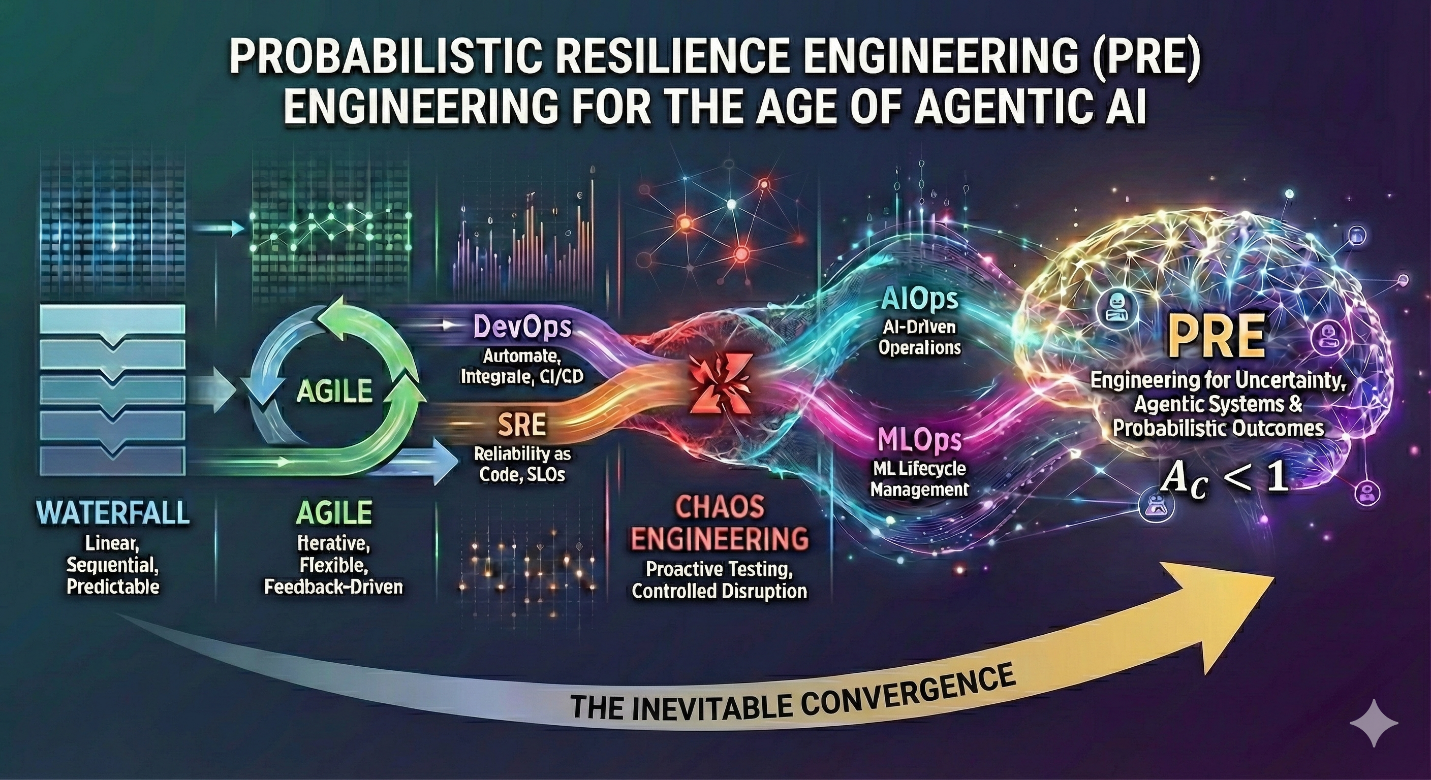

The Pivot

The software industry stands at a precipice. We are attempting to operate non-deterministic AI agents using deterministic operations playbooks designed for the monoliths of 2010. We are repeating the mistakes of the past, but at a velocity and scale that makes manual remediation impossible.

For fifteen years, Chaos Engineering has served as a critical but ultimately reactive tool. It was a test. It was an afterthought. It was a "bolt-on" verification for systems already in production. If we continue deterministic governance practices for probabilistic systems, we will repeat the bolt-on failures of DR compliance.

That era is over.

In the age of Agentic AI, we cannot afford to wait for production to discover that our agents are hallucinating or diverging. We must move from Disaster Recovery (responding to crashes — MTTR, RPO & RTO) to Probabilistic Resilience Engineering (preventing the drift — Coherence, Damping).

We propose a shift from just checking for faults and identifying system limits to architecting for Intrinsic Coherence — the state where multi-agent logic remains consistent with the system's intent, even while under stress.

The 15-Year Debt

Practitioners have known for years that something was wrong.

You've watched governance get bolted on after architecture was set. You've seen "AI safety" treated as a compliance checkbox rather than a design principle. You have felt the gap between what the dashboards display and what is actually happening in production.

The laws of thermodynamics do not care about your uptime SLA. When entropy scales faster than recovery, the system collapses. Probabilistic Resilience Engineering (PRE) is not a luxury; it is the physics of survival.

Probabilistic Resilience Engineering is not a new idea. It is the formalization of what the best engineers have been doing intuitively: treating uncertainty as a first-class citizen, building containment into architecture, measuring what matters instead of what's easy.

We are not inventing a discipline. We are naming one that already exists in scattered practices across the industry and giving it the coherence it deserves.

You have already seen this:

The trading floor engineer who built position limits as architectural circuit breakers, not just compliance checkboxes. The SRE who deployed Chaos Monkey because "you cannot trust what you have not stressed." The ML engineer who routes low-confidence predictions to human review instead of auto-actioning. The data engineer who accepts less-than-perfect pipeline reliability and builds in reconciliation.

Canary deployments. Error budgets. Confidence score routing. Graceful degradation. Bulkhead patterns. Dead letter queues. Stock exchange circuit breakers that halt trading when volatility cascades.

These are not workarounds. They're the scattered practices of Probabilistic Resilience Engineering built by engineers who understood intuitively that uncertainty is a first-class citizen, containment is architecture, and distribution matters more than state.

They designed systems where the circuit breaker couldn't be skipped because it wasn't a policy to follow — it was the physics of the signal path itself.

They've been called pragmatic. Risk-aware. Over-engineers. Paranoid.

We call them Pioneers!

And you've seen what happens when they're ignored.

The 2003 Northeast blackout cascaded across eight U.S. states and Ontario because grid circuit breakers could not isolate fast enough. Fifty-five million people lost power. Air Canada's chatbot hallucinated a refund policy with no confidence routing to humans, and the company was held legally liable for the fabrication. Lawyers cited cases that didn't exist because their AI had no verification loop. Tesla's Autopilot maintains high confidence until the moment it gives up entirely, with no graceful degradation and no warning: just sudden disengagement, and fatal collisions.

These were not failures of monitoring. They were failures of architecture.

In every case, the circuit breaker existed. The governance was documented. The policy was written. And in every case, an agent — human or machine — skipped the circuit breaker.

| Pioneers | Disasters |
|---|---|
| Containment was architecture | Containment was policy |
| The circuit breaker was physics | The circuit breaker was advisory |
| Uncertainty was a signal to route on | Uncertainty was noise to suppress |
| Graceful degradation was designed | Graceful degradation was hoped for |
| The human-in-loop was the architecture | The human-in-loop was the exception handler |

The pioneers built systems where governance could not be bypassed because it was the signal path itself. You cannot "route around" a membrane you must pass through to act.

The disasters built systems where governance could be bypassed, because it was a layer on top, a policy to follow, a warning to acknowledge. And under pressure, under confidence, under deadline — it was skipped.

This is the architecture we reject.

This is why PRE must exist.

Three Laws of PRE

These are the foundational principles. They are not guidelines — they are constraints that shape everything else.

The question is not "is this correct?" The question is "is this workflow trustworthy?"

"We value trust over truth."

Correctness is a property of a single output. Trust is a property of a system over time. You cannot verify every LLM response. You can verify that the architecture containing it enforces transparency, auditability, and containment.

Do not rely on the model to be safe — rely on the architecture encapsulating it.

Safety is not a guardrail around the model. It is the signal path through which the model operates. An agent that can act without governance approval is not "unmonitored" — it is architecturally broken.
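As a minimal sketch of "governance as the signal path", the snippet below gives the executor only to a gate object, so the only way for an agent to act is to pass through it. Everything here is hypothetical: `Action`, `GovernanceGate`, and the confidence-threshold policy are illustrative stand-ins, not an AROps API, and a real policy would be richer than a single threshold.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Action:
    name: str
    confidence: float  # agent-reported confidence in [0, 1]


class GovernanceGate:
    """Hypothetical gate: the only object that holds the executor."""

    def __init__(self, executor: Callable[[Action], str], min_confidence: float) -> None:
        self._executor = executor
        self._min_confidence = min_confidence
        # Every decision is recorded, approved or not, for auditability.
        self.audit_log: List[Tuple[str, bool]] = []

    def submit(self, action: Action) -> Optional[str]:
        """Run the action only if the placeholder policy approves it."""
        approved = action.confidence >= self._min_confidence
        self.audit_log.append((action.name, approved))
        if not approved:
            return None  # the action never reaches the executor
        return self._executor(action)


gate = GovernanceGate(executor=lambda a: f"executed {a.name}", min_confidence=0.8)
ok = gate.submit(Action("issue_refund", confidence=0.95))
blocked = gate.submit(Action("issue_refund", confidence=0.40))
```

The design point is that the agent is never handed the executor directly; the blocked action leaves an audit entry but produces no side effect.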

Financial exhaustion is a reliability incident.

A runaway agent that drains your token budget at 3am is not a "cost issue" — it is an outage.

"Cost is not finance — it is resilience."

Economic signals are first-class citizens in the governance loop. Runaway cost is not detected by monitoring — it is prevented by architecture that makes unbounded resource consumption a coherence violation.
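One way to read this law in code is a budget that sits in the call path, so an agent cannot spend tokens it has not been granted. The sketch below is illustrative only: `TokenBudget`, `BudgetExceeded`, and the limit of 1,000 tokens are hypothetical names and numbers chosen for the example.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a spend would push an agent past its token budget."""


class TokenBudget:
    """Hypothetical hard cap on tokens, checked before the call is made."""

    def __init__(self, limit: int) -> None:
        if limit <= 0:
            raise ValueError("limit must be positive")
        self.limit = limit
        self.spent = 0

    def reserve(self, tokens: int) -> None:
        """Reserve tokens up front; refuse rather than overspend."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        if self.spent + tokens > self.limit:
            raise BudgetExceeded(
                f"requested {tokens}, only {self.limit - self.spent} left"
            )
        self.spent += tokens


budget = TokenBudget(limit=1_000)
budget.reserve(600)      # within the cap, so it is granted
try:
    budget.reserve(600)  # would exceed the cap, so it is refused
    refused = False
except BudgetExceeded:
    refused = True
```

Because the refusal happens before the spend, the runaway case is prevented rather than detected after the fact.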

The Fracture Before the Break

In 1954, two de Havilland Comets — the world's first commercial jets — disintegrated in mid-flight. Every inspection had shown green. Every system reported healthy.

The problem was invisible. Each pressurization cycle added microscopic fatigue damage around the window cutouts. Not cracks you could see. No damage any routine inspection detected. Just metal fatigue accumulating, flight after flight, in a fuselage that was certain to fail even though it had not failed yet.

This pattern repeats across every domain. The I-35W bridge was rated "structurally deficient" for seventeen years before it collapsed during rush hour. Challenger's O-ring risk was documented the night before launch — but governance was advisory, and management overrode. In 2008, financial systems showed healthy balance sheets while invisible correlations accumulated toward inevitable collapse.

These systems weren't broken. They were in a Fragile State.

They maintained coherence by exhausting hidden capacity. Each stress cycle depleted reserves that no dashboard measured. They looked stable, right up until they catastrophically were not.

This is why we measure the Coherence Tension Index (CTI): not "is the system coherent?" but "at what cost?" High CTI is the stress fracture before the break: a system not broken yet, but thermodynamically destined to fail.

Traditional observability will never see it. You cannot measure accumulated stress by asking "is it working?" Only by asking "how hard is it working to stay coherent?"

The Physics of Failure

The Thermodynamics of Agentic Systems

In deterministic systems, failure is binary. In probabilistic systems, failure is a distribution shift. We need metrics that capture this reality.

The metrics we need to measure the Physics of Failure are already available — confidence, tokens, latency.

We do not measure uptime. We measure the propagation of uncertainty.

$$H_{cascade} = -\sum p(x_i) \log p(x_i)$$

Where $p(x_i)$ is the probability of output $x_i$ in the distribution of agent outputs at a boundary: how uncertain or divergent the signals are. This is Shannon entropy applied to error propagation. Not metaphor. Measurement.
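A minimal sketch of the calculation, under two assumptions the text does not fix: agent outputs have already been bucketed into discrete labels (for example, normalized answers from repeated sampling), and the logarithm is base 2, so the result is in bits. The function name `cascade_entropy` is hypothetical.

```python
import math
from collections import Counter
from typing import Iterable


def cascade_entropy(outputs: Iterable[str]) -> float:
    """Shannon entropy, in bits, of the empirical distribution of outputs."""
    counts = Counter(outputs)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one output")
    # H = -sum p(x_i) * log2 p(x_i), with p estimated from observed frequencies.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


agreed = cascade_entropy(["a", "a", "a", "a"])     # 0.0 bits: every sample agrees
split = cascade_entropy(["a", "a", "b", "b"])      # 1.0 bit: two equally likely answers
scattered = cascade_entropy(["a", "b", "c", "d"])  # 2.0 bits: four equally likely answers
```

How outputs are bucketed into labels is the hard part in practice, and it is left open here.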

"The New SLA!"

This is the single most important number in probabilistic resilience:

$$A_C = \frac{\Delta H_{downstream}}{\Delta H_{upstream}}$$

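In plain language: did the system amplify the error, or contain it? Here $\Delta H_{upstream}$ is the change in cascade entropy injected at a boundary, and $\Delta H_{downstream}$ is the change that results on the far side of it.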

- If $A_C > 1$: The system is an amplifier. The lie is growing.

- If $A_C < 1$: The system is a damper. The error is being contained.

This is the new SLA. Not "five nines of uptime." Not "p99 latency under 200ms." But: "$A_C < 1$ under stress."
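The ratio can be sketched directly from the formula. The assumption here is that each $\Delta H$ is the difference between entropy measured under stress and entropy measured at baseline, at the same boundary; the function and parameter names are hypothetical.

```python
def amplification_coefficient(
    h_up_baseline: float,
    h_up_stressed: float,
    h_down_baseline: float,
    h_down_stressed: float,
) -> float:
    """A_C = (change in downstream entropy) / (change in upstream entropy)."""
    delta_up = h_up_stressed - h_up_baseline
    delta_down = h_down_stressed - h_down_baseline
    if delta_up == 0:
        # Nothing was injected upstream, so the ratio has no meaning.
        raise ValueError("no upstream change in entropy; A_C is undefined")
    return delta_down / delta_up


# Upstream entropy rises by 0.5 bits in both scenarios.
amplifier = amplification_coefficient(0.5, 1.0, 0.5, 1.5)  # downstream rises by 1.0
damper = amplification_coefficient(0.5, 1.0, 0.5, 0.75)    # downstream rises by 0.25
```

In the first scenario the ratio is 2.0 (an amplifier); in the second it is 0.5 (a damper).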

Traditional monitoring misses "silent failure." A system may maintain high coherence only by exhausting its resources — this is the Fragile State.

$$CTI = \frac{\text{Coherence Load}}{\text{Adaptive Capacity}}$$

High CTI indicates a system that is not broken yet — but is thermodynamically destined to fail. It is the stress fracture before the break.
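The text does not define how Coherence Load or Adaptive Capacity are measured, so the sketch below picks one possible proxy purely for illustration: tokens spent to reach a coherent answer (including retries) as the load, and the token budget per answer as the capacity. The function name and the numbers are hypothetical.

```python
def coherence_tension_index(coherence_load: float, adaptive_capacity: float) -> float:
    """CTI = Coherence Load / Adaptive Capacity (both in the same unit)."""
    if adaptive_capacity <= 0:
        raise ValueError("adaptive_capacity must be positive")
    if coherence_load < 0:
        raise ValueError("coherence_load must be non-negative")
    return coherence_load / adaptive_capacity


# Two time windows in which every answer was coherent and every check was green.
# Assumed proxy: tokens spent per coherent answer vs. a 1,000-token budget.
relaxed = coherence_tension_index(200, 1_000)   # 0.2: plenty of reserve left
strained = coherence_tension_index(900, 1_000)  # 0.9: coherent, but nearly exhausted
```

Both windows look identical to an uptime monitor; only the ratio shows that the second one is close to running out of reserve.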

The Hallucination Cascade

Here is the failure mode that defines the probabilistic age:

Agent A receives a query. It hallucinates a fact with moderate confidence (0.65). Agent B receives A's output as input, treats it as grounded truth, and builds on it with high confidence (0.89). Agent C synthesizes B's output into a decision with certainty (0.97).

The lie didn't just propagate. It grew.

This is the Hallucination Cascade — and it is invisible to traditional observability. Every agent returned 200 OK. Every latency metric was green. The dashboards showed healthy while the system confidently corrupted its own outputs.
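One simple way to make the growth visible is to compare each agent's reported confidence with the weakest confidence upstream of it. This is a heuristic assumed for illustration, not a metric defined in this document: it supposes a stage that adds no independent evidence should not be more confident than its inputs. The function name is hypothetical; the numbers are the ones from the example above.

```python
from typing import List, Optional, Tuple


def find_confidence_inflation(chain: List[Tuple[str, float]]) -> List[str]:
    """Return agents whose reported confidence exceeds the weakest upstream link."""
    flagged: List[str] = []
    ceiling: Optional[float] = None  # lowest confidence seen so far in the chain
    for agent, confidence in chain:
        if ceiling is not None and confidence > ceiling:
            flagged.append(agent)
        ceiling = confidence if ceiling is None else min(ceiling, confidence)
    return flagged


chain = [("A", 0.65), ("B", 0.89), ("C", 0.97)]
inflated = find_confidence_inflation(chain)  # ["B", "C"]
```

Agents B and C are flagged because each reports more confidence than the 0.65 it inherited, which is exactly what a status-code or latency check cannot see.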

The Semantic Circuit Breaker exists to catch this. It fires not because the server crashed, but because the physics of the conversation became unstable. When $A_C$ crosses 1.0, meaning the injected uncertainty is amplified rather than damped, the circuit opens automatically, without waiting for human verification.
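A minimal sketch of such a breaker, assuming the entropy changes on each side of a boundary are already being measured. The class name, the choice to trip at or above the threshold, and the manual `reset` are assumptions made for this example, not a description of an existing implementation.

```python
class SemanticCircuitBreaker:
    """Hypothetical breaker that opens when A_C reaches the threshold."""

    def __init__(self, threshold: float = 1.0) -> None:
        self.threshold = threshold
        self.is_open = False

    def observe(self, delta_h_upstream: float, delta_h_downstream: float) -> bool:
        """Record one measurement; return True if the signal may pass."""
        if self.is_open:
            return False  # stays open until explicitly reset
        if delta_h_upstream <= 0:
            # No injected uncertainty, so A_C is undefined; let the signal pass.
            return True
        a_c = delta_h_downstream / delta_h_upstream
        if a_c >= self.threshold:
            self.is_open = True  # amplification detected: block the signal path
            return False
        return True

    def reset(self) -> None:
        """Close the breaker again (in this sketch, a deliberate manual step)."""
        self.is_open = False


breaker = SemanticCircuitBreaker()
first = breaker.observe(0.5, 0.25)  # A_C = 0.5, damped: passes
second = breaker.observe(0.5, 1.0)  # A_C = 2.0, amplified: breaker opens
third = breaker.observe(0.5, 0.1)   # still open, so blocked regardless of A_C
```

The check is pure arithmetic on measured signals, with no model in the loop, which is what lets it run without human or LLM judgment.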

You cannot rely on AI to evaluate AI. That just adds another liar into the chain. You measure the signal, not the judgment.

This is not theoretical.

We demonstrate this exact cascade — and the circuit breaker that stops it — live.

The Constitution of Resilience

The Game Theory of the Mesh

We cannot rely on the "goodwill" of autonomous agents. We must enforce a Cooperative Equilibrium. Just as human societies require a Bill of Rights, our Agentic Mesh requires "non-negotiable" invariants that cannot be bypassed.

The Five Mesh Invariants:

- Recovery Arc Mutual Awareness — "Broadcast before act." No agent attempts recovery without signaling the mesh.

- Escalation Triggers — "Thresholds over feelings." If N recovery attempts fail or T duration passes, the agent yields to an arbiter. No negotiation.

- Resource Reservation Semantics — "Declare before execute." CPU and token limits are negotiated, not seized.

- Circuit Breaker on Recovery Itself — "No infinite loops." The mesh detects and severs recursive recovery attempts.

- Equilibrium Audits — "Trust and verify." Continuous polling of the state, not just the output.

These are not policies. They are architectural constraints. An agent cannot "route around" a signal that is physically required for it to act.
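Three of these invariants ("broadcast before act", "thresholds over feelings", "no infinite loops") can be sketched together as a small governor that an agent must ask before each recovery attempt. `RecoveryGovernor`, its parameters, and the message format are hypothetical; the clock is injectable only so the example is deterministic.

```python
import time
from typing import Callable, List, Optional


class RecoveryGovernor:
    """Hypothetical gatekeeper for recovery attempts on one incident."""

    def __init__(
        self,
        max_attempts: int,
        max_seconds: float,
        broadcast: Callable[[str], None],
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_attempts = max_attempts  # N in the escalation trigger
        self.max_seconds = max_seconds    # T in the escalation trigger
        self._broadcast = broadcast
        self._clock = clock
        self._attempts = 0
        self._started: Optional[float] = None

    def request_attempt(self, agent_id: str) -> bool:
        """Return True if the agent may try recovery, False if it must yield."""
        now = self._clock()
        if self._started is None:
            self._started = now
        out_of_attempts = self._attempts >= self.max_attempts
        out_of_time = now - self._started >= self.max_seconds
        if out_of_attempts or out_of_time:
            self._broadcast(f"{agent_id}: escalating to arbiter")
            return False
        self._attempts += 1
        # Broadcast happens before the caller is allowed to act.
        self._broadcast(f"{agent_id}: recovery attempt {self._attempts}")
        return True


messages: List[str] = []
governor = RecoveryGovernor(
    max_attempts=2, max_seconds=30.0, broadcast=messages.append, clock=lambda: 0.0
)
results = [governor.request_attempt("agent-7") for _ in range(3)]
```

The third request is refused and escalated, so recovery cannot loop indefinitely, and every attempt is announced to the mesh before it runs.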

Adaptive Resilience Operations (AROps)

The Practice of PRE and the Civics of Emergence

If PRE is the paradigm, AROps is the practice. We reject the notion that AI is a "black box" that cannot be governed. We establish the Golden Rule:

"Emergence Authority follows Coherence Boundary." You cannot authorize what you cannot measure.

The Three Authority Arcs

When your hand touches a hot stove, you don't think. You don't evaluate. You don't consult. Your hand is already moving before you feel pain.

The signal travels to your spinal cord and back to your muscles — it never reaches your brain. This is architecture, not policy. You cannot decide to leave your hand on the burner.

The Reflex Arc in AROps works identically. When semantic drift exceeds threshold, the circuit breaker trips. No LLM reasoning. Sub-2ms response. The signal that would propagate the hallucination physically cannot pass.

The first time your body encounters a pathogen, the response is slow. Your immune system scrambles, trying different antibodies, learning what works. The second time? Annihilation in hours.

Your immune system remembered. The successful pattern was amplified and stored.

The Adaptive Arc in AROps works identically. When successful patterns emerge — recovery strategies that work, routing decisions that improve coherence — they propagate across the mesh. What worked gets amplified. What failed gets pruned.
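A toy sketch of "amplify what worked, prune what failed", assuming recovery patterns can be named and scored with a single weight. `PatternLedger`, the reward and penalty values, and the pattern names are all hypothetical; how patterns actually propagate across a mesh is not specified here.

```python
from typing import Dict, Optional


class PatternLedger:
    """Hypothetical shared record of how well each recovery pattern has worked."""

    def __init__(self, reward: float = 1.0, penalty: float = 1.0, prune_below: float = 0.0) -> None:
        self.reward = reward
        self.penalty = penalty
        self.prune_below = prune_below
        self.weights: Dict[str, float] = {}

    def record(self, pattern: str, succeeded: bool) -> None:
        """Raise the weight on success, lower it on failure, prune if too low."""
        weight = self.weights.get(pattern, 0.0)
        weight += self.reward if succeeded else -self.penalty
        if weight < self.prune_below:
            self.weights.pop(pattern, None)  # what failed gets pruned
        else:
            self.weights[pattern] = weight   # what worked gets amplified

    def best(self) -> Optional[str]:
        """Return the highest-weighted pattern, or None if nothing is stored."""
        if not self.weights:
            return None
        return max(self.weights, key=lambda name: self.weights[name])


ledger = PatternLedger()
ledger.record("restart_with_cache", succeeded=True)
ledger.record("restart_with_cache", succeeded=True)
ledger.record("retry_blindly", succeeded=False)
preferred = ledger.best()
```

After these three observations only `restart_with_cache` remains in the ledger, with a weight of 2.0.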

You don't use your prefrontal cortex to pull your hand from a hot stove. You use it to decide who to marry. Whether to take the job. What you stand for.

The prefrontal cortex is the seat of executive function — long-term planning, ethical reasoning, identity-defining choices. It's slow. It's deliberate. It considers consequences across years, not milliseconds.

The Governance Arc in AROps handles high-level architectural decisions, ethical boundaries, and strategic direction. The questions that should involve human deliberation — not because the system can't decide, but because these decisions define what the system is.

| Arc | Human System | Speed | Question Answered |
|---|---|---|---|
| Reflex | Spinal withdrawal reflex | Milliseconds | "Is this dangerous RIGHT NOW?" |
| Adaptive | Immune memory | Hours to days | "What worked that we should remember?" |
| Governance | Prefrontal cortex | Days to years | "Is this who we are?" |

AROps respects the architecture your body already knows: fast things fast, slow things slow, and never confuse which is which.

The Practitioners

AROps practitioners are Resilience Architects, Cascade Analysts, and Drift Engineers.

They will help create the probabilistic operational practices of the future as they learn to suppress and contain semantic drift, to adapt and amplify emergent patterns by extending learning to the mesh, and to evaluate and evolve the governance of architectural and ethical boundaries.

The Mission

This manifesto exists for a reason beyond technical correctness.

For too long, sophisticated resilience engineering has been the exclusive province of large companies. The tooling is expensive. The expertise is scarce. The playbooks are proprietary.

We reject this.

Probabilistic Resilience Engineering is open by design. AROps is open source. The physics belong to everyone.

Our mission is democratization: to give every organization the benefit of 15 years of production learning. To help them leverage what others have discovered. To create something that lasts beyond any product, any company, any individual.

Like Agile, PRE and AROps will thrive through open collaboration, not proprietary tooling.

AI resilience is not an operational luxury — it is an infrastructure imperative, as we increasingly rely on AI systems.

The Deliberate Gap

We name phenomena we cannot fully explain.

This document introduces the vocabulary to help us and questions to guide us. It names the paradigm. It presents the foundational metrics and principles.

It does not present a finished science.

The full measurement framework is being formalized through academic collaboration and production validation. The implementation playbooks will emerge from community practice, as will the edge cases, the failure modes we haven't yet imagined, and the refinements that only come from experimentation and scale.

We are not handing you a completed discipline. We are inviting you to help build one.

Your production experience will validate or refute what we propose here. Your edge cases will sharpen the physics. Your implementations will prove what works and expose what doesn't.

This is chapter one. The rest is written together.

The Invitation

To Practitioners: You have felt the gap between what the dashboards show and what's actually happening. You have carried the cognitive load of the systems that "work" but cannot be trusted. This framework is for you. Use it. Break it. Improve it. Tell us what we got wrong.

To Researchers: The metrics presented here need validation at scale. The relationships between cascade entropy, coherence tension, and system topology are unexplored. We have named phenomena we cannot yet fully explain. Help us formalize what we're observing. Cite this, extend this, challenge this.

To Leaders: The transition from policy-layer governance to architectural governance is not optional — it is inevitable. The only question is whether you navigate it deliberately or are dragged through it by incidents. We offer a roadmap. The bridge from where you are to where you must be.

To Everyone: Join us in building the resilience discipline that AI systems deserve. Not because it's intellectually interesting — though it is. But because the systems we're building will touch every aspect of human life, and they must be worthy of that responsibility.

Start Here

Watch. Run. Validate. Break it. Tell us what we got wrong.

Conclusion

We are building the nervous system for the next generation of software. We are moving from "keeping the lights on" to "keeping the logic true."

Probabilistic Resilience is the recognition that AI will drift, will hallucinate, and will fail in ways that don't trigger alerts.

Adaptive Resilience Operations is the discipline that catches the drift before it becomes damage.

"We are building the suspension system, not just the brakes."

The future is probabilistic. Our operations must be adaptive.